UX Research for Agile AI Product Development of Intelligent Collaboration Software Platforms

The rush to adopt AI collaboration tools has created a paradox. Teams deploy intelligent assistants, LLM-powered meeting summarizers, and AI-driven project management features at record speed, yet few organizations systematically measure whether these tools genuinely improve collaboration. I've watched teams celebrate velocity gains while missing subtle ways AI changes decision quality, team trust, and collaboration depth. According to PwC's AI Agent Survey, 88% of executives plan to increase AI budgets over the next year because of agentic AI's potential, yet measurement frameworks lag far behind investment (PwC, 2025).

The missing piece isn't more AI features. It's better ways to understand their human impact. The real question: do LLM-based automations make teams more effective at solving complex problems, or do they introduce friction that offsets productivity gains?

Having spent years researching how teams interact with intelligent collaboration platforms like Microsoft Teams and Cisco Webex, I've developed a systematic approach to answering this question. The framework I present here addresses a critical gap in how organizations evaluate and build AI-powered collaboration tools.

Beyond Surface Metrics: Five Dimensions That Matter

Traditional agile metrics like velocity, sprint completion rates, and cycle time tell an incomplete story when AI enters the collaboration stack. Through extensive field research with cross-functional product teams, I've developed a UX research framework built on five dimensions that capture what genuinely matters for team effectiveness in AI-augmented environments.

1 - Cognitive Load and Context Switching

This dimension examines mental effort when teams move between AI tools and traditional platforms. Teams often adopt multiple specialized AI assistants without considering the cumulative cognitive tax. In my research on intelligent collaboration platforms, I've found this to be the most underestimated factor in AI tool adoption failure.

I measure this through diary studies, cognitive walkthroughs, and quantitative assessments. Key metrics include time-to-decision after consulting AI tools, error rates when transitioning between tasks, and mental strain during sprints.
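As a rough illustration of how the first two quantitative metrics could be computed, here is a minimal Python sketch. It assumes each decision is logged with the time the AI tool was consulted and the time the decision was recorded, and that each task transition is flagged if an error followed it; the record types and function names are hypothetical, not part of any specific tool.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class DecisionEvent:
    # Hypothetical diary-study or telemetry record (times in minutes).
    ai_consulted_at: float  # when the team consulted the AI tool
    decided_at: float       # when the resulting decision was logged


@dataclass
class TaskTransition:
    # Hypothetical record of one switch between tasks or tools.
    had_error: bool  # True if an error was logged right after the switch


def median_time_to_decision(events: list[DecisionEvent]) -> float:
    """Median minutes from consulting the AI tool to logging a decision."""
    if not events:
        raise ValueError("no decision events")
    return median(e.decided_at - e.ai_consulted_at for e in events)


def transition_error_rate(transitions: list[TaskTransition]) -> float:
    """Share of task transitions that were followed by an error."""
    if not transitions:
        return 0.0
    return sum(t.had_error for t in transitions) / len(transitions)
```

The median is used rather than the mean so that a single stalled decision does not dominate a small diary-study sample.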

Here's what teams underestimate: verification overhead. When an LLM generates a user story, developers mentally compare it against requirements, check edge cases, and validate assumptions. This is real cognitive work that stays invisible in dashboards. In one study I led across four or more development teams using an AI-powered collaboration copilot, verification tasks consumed 23% of the time savings the tool was supposed to provide.
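Verification overhead can be made visible with simple arithmetic: subtract the time spent checking AI output from the time the tool was expected to save. The sketch below assumes both quantities are measured in minutes over the same period (for example, time logs compared against a baseline sprint); the function name is illustrative, and the figures in the usage line are made up rather than taken from the study.

```python
def verification_overhead(gross_savings_min: float, verification_min: float) -> dict[str, float]:
    """Split an AI tool's expected time savings into verification cost and net savings.

    gross_savings_min: minutes the tool was expected to save over the period
    verification_min: minutes spent checking, correcting, or validating AI output
    """
    if gross_savings_min <= 0:
        raise ValueError("expected savings must be positive")
    if verification_min < 0:
        raise ValueError("verification time cannot be negative")
    return {
        # Time actually returned to the team once verification is paid for.
        "net_savings_min": gross_savings_min - verification_min,
        # Fraction of the promised savings consumed by verification work.
        "share_consumed": verification_min / gross_savings_min,
    }


# Illustrative numbers only: 120 minutes of expected savings, 30 spent verifying.
print(verification_overhead(120, 30))  # {'net_savings_min': 90, 'share_consumed': 0.25}
```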

Agile AI product development impact: Software developers benefit when AI reduces context-switching during sprint execution, maintaining flow state while automating administrative tasks without verification bottlenecks.

2 - Trust Calibration and Verification Burden

This dimension focuses on how teams develop appropriate trust in AI outputs. The challenge isn't just whether AI works. It's whether teams know when to trust it and when to question it. Gartner's research on human-AI collaboration confirms this challenge at scale: the quality of collaboration between humans and AI will determine enterprise performance in the coming years (Gartner, 2025).

Through think-aloud protocols and retrospective interviews, I track verification time, over-reliance incidents, and dismissal rates of valid suggestions. What I've seen repeatedly across intelligent collaboration platform deployments: teams cycle through "trust oscillation." They start with excessive trust, get burned after errors, then gradually recalibrate. This pattern appears consistently whether teams are using AI meeting assistants, automated backlog tools, or intelligent documentation systems.
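The three trust-calibration measures can be derived from a log of AI suggestions once each one has been judged correct or incorrect after the fact. In this sketch, an over-reliance incident is an accepted suggestion that turned out to be wrong, and the dismissal rate is the share of correct suggestions the team rejected; these operational definitions and the `SuggestionOutcome` record are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class SuggestionOutcome:
    # Hypothetical record for one AI suggestion, labeled after the fact
    # (e.g. during a retrospective interview).
    ai_was_correct: bool   # did the suggestion hold up?
    team_accepted: bool    # did the team act on it?
    verify_minutes: float  # time spent checking it before deciding


def trust_calibration(outcomes: list[SuggestionOutcome]) -> dict[str, float]:
    """Over-reliance rate, valid-suggestion dismissal rate, and mean verification time."""
    if not outcomes:
        raise ValueError("no suggestions to analyze")
    wrong = [o for o in outcomes if not o.ai_was_correct]
    right = [o for o in outcomes if o.ai_was_correct]
    return {
        # Share of flawed suggestions the team accepted anyway.
        "over_reliance_rate": sum(o.team_accepted for o in wrong) / len(wrong) if wrong else 0.0,
        # Share of sound suggestions the team rejected.
        "dismissal_rate": sum(not o.team_accepted for o in right) / len(right) if right else 0.0,
        "mean_verify_minutes": sum(o.verify_minutes for o in outcomes) / len(outcomes),
    }
```

Computed per sprint, these rates offer one possible view of trust oscillation: a high over-reliance rate early, a spike in dismissals after an incident, then both settling.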

Agile AI product development impact: Teams that establish clear LLM output validation protocols during sprint planning reduce rework cycles by catching flawed recommendations early.

3 - Collaborative Sensemaking

AI changes how teams make sense of complex problems. When LLM-powered assistants auto-generate action items or highlight blockers, teams spend less time synthesizing information collectively. But does efficiency come at the cost of shared understanding?

I use conversation analysis, communication pattern mapping, and ethnographic observation. Metrics include discussion depth, consensus quality (measured through decision durability), and perspective diversity.

In one study of a team using AI-generated meeting summaries on their intelligent collaboration platform, async catch-up time dropped by 40%, but decisions had a 23% higher revision rate in subsequent sprints. Teams developed what I call "summary confidence," believing they understood discussions from AI notes when nuance had been compressed away. This finding has significant implications for how we design AI features in collaboration software.
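Decision durability can be approximated as the share of decisions not revised within a follow-up window, with before/after comparisons expressed as relative change. The sketch below assumes a hypothetical `Decision` record with a `revised_later` flag, and it treats figures like "23% higher" as relative changes rather than percentage-point differences; the text does not say which, so that reading is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    # Hypothetical record of one team decision.
    decision_id: str
    revised_later: bool  # revised or reversed within the follow-up window


def revision_rate(decisions: list[Decision]) -> float:
    """Share of decisions revised within the follow-up window."""
    if not decisions:
        raise ValueError("no decisions recorded")
    return sum(d.revised_later for d in decisions) / len(decisions)


def decision_durability(decisions: list[Decision]) -> float:
    """Share of decisions that held; the complement of the revision rate."""
    return 1.0 - revision_rate(decisions)


def relative_change(baseline: float, treatment: float) -> float:
    """(treatment - baseline) / baseline; negative values mean a decrease."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (treatment - baseline) / baseline
```

Because a negative result from `relative_change` indicates a drop, the same helper also fits a measure like async catch-up time.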

Agile AI product development impact: Teams that preserve synchronous sensemaking for architectural decisions while using AI for documentation maintain decision quality without sacrificing velocity.

4 - Knowledge Distribution and Skill Development

This dimension examines whether AI democratizes expertise or creates knowledge silos. In agile environments, cross-functional collaboration depends on shared understanding. Intelligent collaboration platforms that embed AI agents must be evaluated not just on task completion but on how they affect team learning dynamics.

I measure this through skill assessments at sprint intervals, pair programming observations, and learning curve analysis. What I've found: AI tools can accelerate junior developers on routine tasks but may slow their mastery of underlying principles. Teams need to design intentional learning moments in which AI assistance is set aside.

Agile AI product development impact: Cross-functional teams benefit when AI agents democratize technical knowledge, enabling designers and product managers to understand implementation constraints without deep engineering expertise.

5 - Alignment With Actual User Needs

This dimension connects AI tool adoption to user-centered outcomes. The ultimate test of any development practice: does it help teams build better products?

I track AI usage intensity against user satisfaction scores, feature adoption rates, and feedback sentiment. Teams using AI to automate administrative overhead often redirect time toward user research. Teams using AI as a replacement for human judgment drift from user needs. This pattern holds across every intelligent collaboration platform I've studied.
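To relate AI usage intensity to user satisfaction, a Pearson correlation over paired per-team or per-sprint observations is a reasonable first pass. This is a minimal, dependency-free sketch; how usage intensity is quantified (for example, AI actions per sprint) is left as an assumption, and with the small samples typical of team studies the coefficient should be read as descriptive, not causal.

```python
from math import sqrt


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between paired observations (e.g. per team-sprint)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length series with at least two points")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Root sums of squares; the 1/n factors cancel in the final ratio.
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("a series with no variation has no defined correlation")
    return cov / (spread_x * spread_y)
```

Computing the coefficient separately for teams that use AI mainly for administrative automation and for teams that use it in place of judgment is one way to probe the split described above.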

Agile AI product development impact: Measuring the connection between AI usage and user satisfaction ensures sprint acceleration doesn't compromise feature value.

Case Study: Evaluating an Intelligent Collaboration AI Copilot in Practice

\

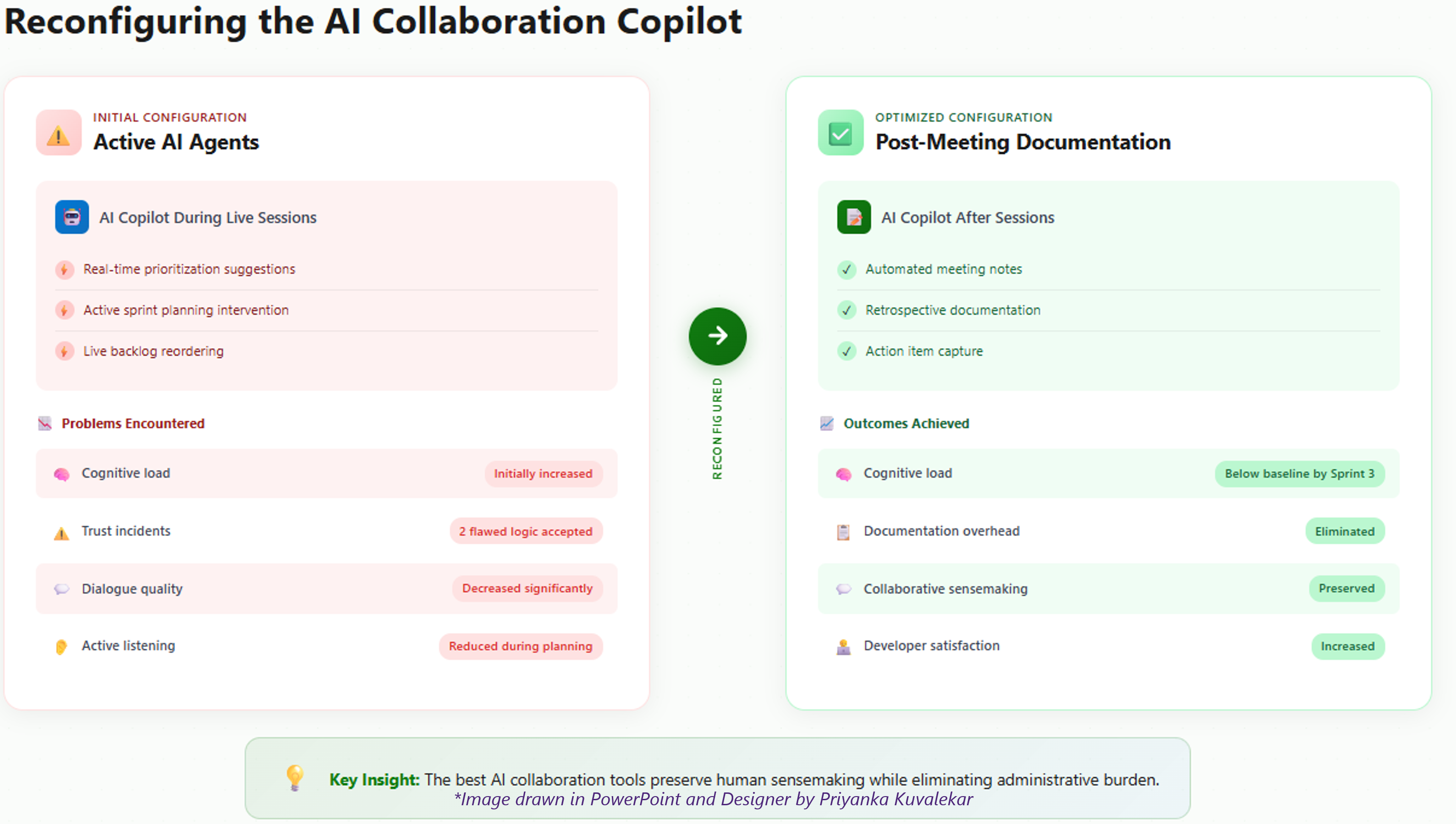

To show these dimensions in action, consider a cross-functional product team that adopted an LLM-powered collaboration copilot within its enterprise communication platform. The copilot offered sprint planning assistance and automated retrospective notes, and it deployed specialized AI agents for backlog prioritization.

I designed an eight-week study across three sprint cycles. The baseline sprint used traditional practices. Sprints two and three introduced the copilot: first as a passive observer in sprint two, then with active AI agents making prioritization suggestions in sprint three.

Cognitive load initially increased, then dropped below baseline by sprint three. Trust calibration proved challenging: in two incidents the team accepted flawed AI agent logic, then overcorrected. Most significantly, dialogue quality decreased as the copilot handled notetaking, reducing active listening and discussion depth.

The team reconfigured the copilot as a post-meeting documentation tool rather than deploying active agents during live collaboration. This preserved efficiency while protecting collaborative sensemaking. Software developers appreciated eliminating documentation overhead without fragmenting attention during planning.

This case illustrates a pattern I've observed across multiple intelligent collaboration platform deployments: the highest-value configuration often differs substantially from the vendor's default setup. UX research that measures human-centered dimensions reveals optimization opportunities invisible to technical metrics alone.

Methodological Considerations for UX Research in Agile AI Product Development

Rigorous UX research on intelligent collaboration platforms requires adapting methodologies to agile cycles. Baseline establishment matters: without measuring pre-AI workflows, teams can't distinguish genuine improvements from placebo effects.

Longitudinal design aligned with sprint cadences is essential. I recommend minimum observation periods of three sprint cycles to capture adoption, calibration, and stabilization phases. Multi-stakeholder perspectives prevent one-sided assessments, since AI impacts different roles asymmetrically. In my experience, product managers, developers, and designers often have divergent perceptions of the same AI tool's value.
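The baseline and three-sprint recommendations can be encoded directly in the analysis so that a comparison refuses to run on too short a window. The sketch below expresses each sprint's metric as a relative change from the pre-AI baseline and repeats that per role, reflecting the multi-stakeholder point. It assumes the three-sprint minimum counts sprints observed with the AI tool in place (adjust `min_sprints` if you count the baseline sprint); `sprint_deltas` and `deltas_by_role` are illustrative names.

```python
def sprint_deltas(baseline: float, sprint_values: list[float], min_sprints: int = 3) -> list[float]:
    """Relative change of a metric in each AI-assisted sprint versus the pre-AI baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    if len(sprint_values) < min_sprints:
        raise ValueError(
            f"need at least {min_sprints} sprints after the baseline, got {len(sprint_values)}"
        )
    return [(v - baseline) / baseline for v in sprint_values]


def deltas_by_role(
    ratings: dict[str, tuple[float, list[float]]], min_sprints: int = 3
) -> dict[str, list[float]]:
    """Apply sprint_deltas per role, since roles can perceive the same tool differently."""
    return {
        role: sprint_deltas(baseline, values, min_sprints)
        for role, (baseline, values) in ratings.items()
    }
```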

Anthropic's research on AI adoption patterns shows that enterprise API users are significantly more likely to automate tasks than consumer users, with automation now exceeding augmentation in overall usage patterns (Anthropic, 2025). Combining telemetry data with ethnographic insights reveals not just what teams do with AI tools, but why those patterns emerge in agile environments.

For researchers studying intelligent collaboration platforms specifically, I've found that communication pattern analysis provides uniquely valuable insights. Tracking changes in message frequency, response latency, and conversation thread depth before and after AI feature introduction reveals subtle shifts in team dynamics that surveys miss.
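A minimal sketch of these communication-pattern metrics, assuming a chat export in which each message has an id, a timestamp in seconds, and an optional id of the message it replies to. Thread depth here is the longest reply chain under each root message, averaged across threads; that definition, the `Message` record, and `communication_metrics` are assumptions for illustration, not any platform's API.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Message:
    # Hypothetical chat-export record; field names are illustrative.
    msg_id: str
    sent_at: float                  # seconds on any consistent clock
    reply_to: Optional[str] = None  # id of the parent message, if this is a reply


def _root_and_depth(msg: Message, by_id: dict[str, Message]) -> tuple[str, int]:
    # Walk up the reply chain (assumed acyclic) to the thread's root message.
    depth = 0
    while msg.reply_to is not None and msg.reply_to in by_id:
        msg = by_id[msg.reply_to]
        depth += 1
    return msg.msg_id, depth


def communication_metrics(messages: list[Message], period_days: float) -> dict[str, Optional[float]]:
    """Message frequency, median reply latency, and mean thread depth for one window."""
    if period_days <= 0:
        raise ValueError("period_days must be positive")
    by_id = {m.msg_id: m for m in messages}
    # Latency between each reply and the message it answers.
    latencies = [m.sent_at - by_id[m.reply_to].sent_at for m in messages if m.reply_to in by_id]
    # Deepest reply chain observed under each root message.
    deepest: dict[str, int] = {}
    for m in messages:
        root, depth = _root_and_depth(m, by_id)
        deepest[root] = max(deepest.get(root, 0), depth)
    return {
        "messages_per_day": len(messages) / period_days,
        "median_reply_latency_s": median(latencies) if latencies else None,
        "mean_thread_depth": sum(deepest.values()) / len(deepest) if deepest else 0.0,
    }
```

Running the function on a window before and a window after the AI feature is introduced yields the before/after comparison; replies whose parent falls outside the window are treated as thread roots.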

What This Means for AI Product Development Teams Today

For UX researchers in agile AI product development, this framework provides a structured approach to evaluating intelligent collaboration platforms. Rather than relying on vendor promises or surface metrics, teams can assess whether tools improve the human dimensions that matter for sprint effectiveness.

For software developers and product teams building AI-powered collaboration features, the framework suggests focusing less on automation breadth and more on understanding how features change team dynamics. The best intelligent collaboration platforms amplify human judgment, reduce cognitive overhead rather than shifting it, and preserve the collaborative sensemaking that agile depends on.

As AI agents become more autonomous, participating in standups, writing tickets, and making prioritization decisions, measurement frameworks must evolve beyond efficiency. PwC found that 28% of executives rank lack of trust as a top challenge to realizing AI agent value, and 67% expect AI agents to drastically transform roles within 12 months (PwC, 2025). Gartner predicts that by 2026, 40% of enterprise applications will integrate task-specific AI agents, up from less than 5% today, transforming how teams collaborate through these platforms (Gartner, 2025).

Organizations investing in rigorous UX research on intelligent collaboration platforms will navigate this transition better than those chasing the latest LLM features without measuring human impact. The methodology I've outlined here provides a foundation for that research.

Key Takeaways

- Traditional agile metrics inadequately capture the human impact of intelligent collaboration platforms, requiring new measurement frameworks focused on cognitive load, trust calibration, collaborative sensemaking, knowledge distribution, and user outcome alignment

- Longitudinal UX research across multiple sprint cycles reveals patterns invisible in single-point assessments, including trust oscillation and the delayed impact of LLM-mediated communication on decision quality

- Software developers benefit most from AI collaboration copilots that automate documentation and administrative overhead while preserving the depth of human collaboration needed for architectural decisions and complex problem-solving in agile product development
